How to Backup PostgreSQL Database to DigitalOcean Spaces with Bash

Introduction

DigitalOcean Spaces provides an S3-compatible object storage service that allows you to store and serve large amounts of data. It is an alternate solution to AWS S3 and offers a simple, affordable, and predictable pricing. For $5 per month, you will get 250GB with 1TB of bandwidth. After this, you are billed $0.02 per GB for storage and $0.01 per GB for transfer per month.

SnapShooter provides a web-based backup solution that provides a user-friendly control panel to manage and automate the PostgreSQL database via a web browser. SnapShooter works with a PostgreSQL server hosted on your own server as well as PostgreSQL managed in a DigitalOcean Managed databases.

This post will show you how to backup PostgreSQL databases to DigitalOcean Spaces manually and using SnapShooter.

Create Your DigitalOcean Spaces

Before starting, you will need to create a new space on the DigitalOcean. Follow the below steps to create a new space on the DigitalOcean:

Step 1 - Sign in to your DigitalOcean account and click on the Spaces in the left pane.

Step 2 - Click on the Create => Spaces from the right side of the DigitalOcean control panel.

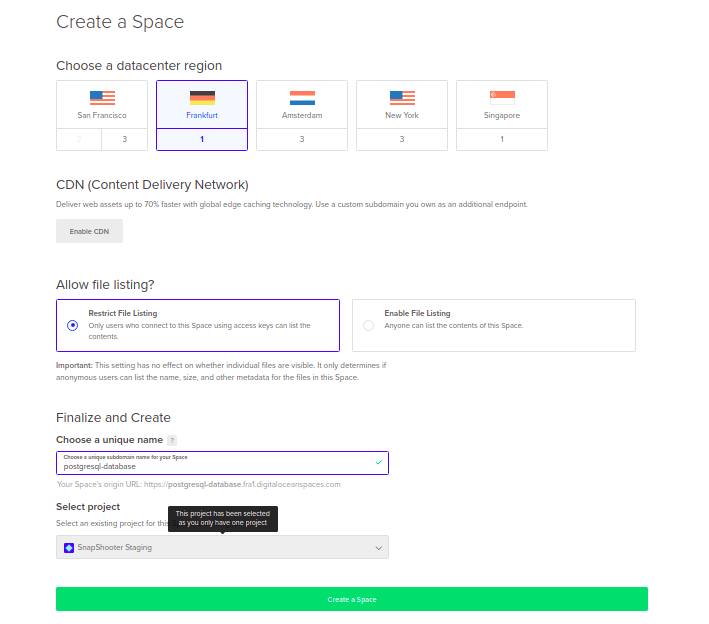

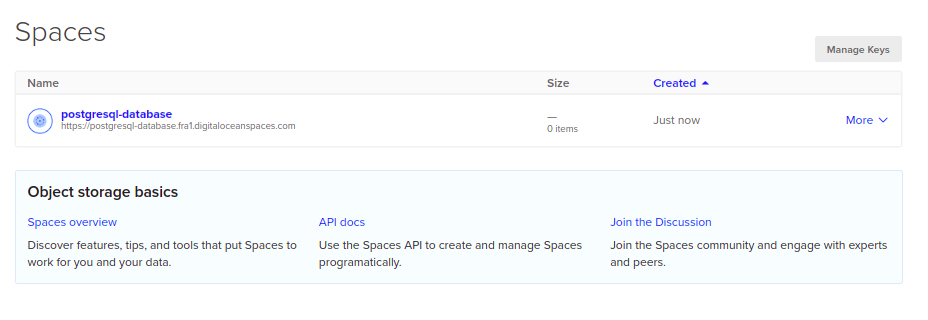

Step 3 - Select your region, Restrict File Listing, Provide your unique space name and click on Create a Space button. Once the Space is created, you should see the following screen:

Create Your DigitalOcean Credentials

In order to create backups with DigitalOcean spaces, you will need the correct access key and secret key to authenticate with it. Follow the below steps to create a DigitalOcean Credentials:

Step 1 - Log in to your DigitalOcean Spaces account or go directly to https://cloud.digitalocean.com/spaces:

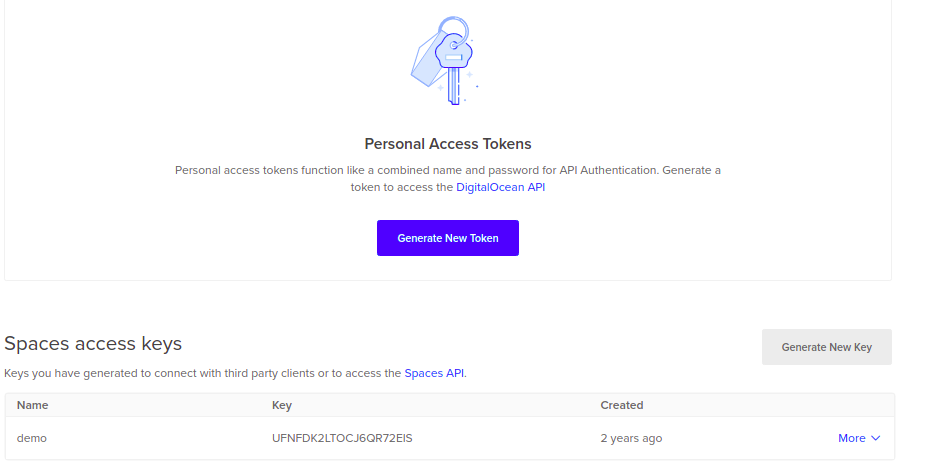

Step 2 - Click on the Manage Keys button on the right-hand side of the Spaces screen.

Step 3 - Click on the Generate New Key next to Spaces access keys. You should see the following screen:

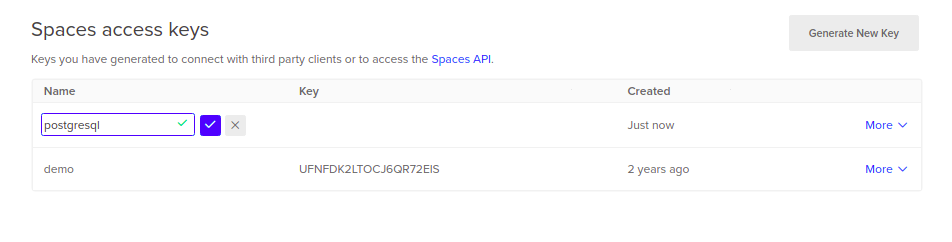

Step 4 - Provide a name for your key and click the checkmark. A new Key and Secret will be generated as shown below:

Backup PostgreSQL Database to DigitalOcean Space Manually

In this section, we will show you how to back up a PostgreSQL database from your server to the DigitalOcean Space using the S3cmd tool.

Install S3cmd

Before starting, you will need to install the S3cmd tool on the server from where you want to back up your PostgreSQL database.

S3cmd is a free and open-source command-line tool that allows you to upload, download and manage data from your server to DigitalOcean Space and other cloud storage service providers. Follow the below steps to install the S3cmd to your server.

First, install the Python and other dependencies by running the following command:

apt-get install python3 python3-setuptools curl -yNext, download the latest version of S3cmd using the following command:

curl -LO https://github.com/s3tools/s3cmd/releases/download/v2.2.0/s3cmd-2.2.0.tar.gzNext, extract the downloaded file with the following command:

tar -xvzf s3cmd-2.2.0.tar.gzNext, navigate to the extracted directory and install it using the following command:

cd s3cmd-2.2.0python3 setup.py installOnce the S3cmd is installed, verify the S3cmd version using the following command:

s3cmd --versionYou will get the following output:

s3cmd version 2.2.0Configure S3cmd

Next, you will need to configure the S3cmd using the DigitalOcean Access key and Secret key. You can configure it using the following command:

s3cmd --configureYou will be asked to provide your DigitalOcean Access Key, Secret Key, Region, and Endpoint as shown below:

Enter new values or accept defaults in brackets with Enter.Refer to user manual for detailed description of all options. Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.Access Key: Your-Access-KeySecret Key: Your-Secret-KeyDefault Region [US]: Frankfurt 1 Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.S3 Endpoint [s3.amazonaws.com]: fra1.digitaloceanspaces.com Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be usedif the target S3 system supports dns based buckets.DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: %(bucket)s.fra1.digitaloceanspaces.com Encryption password is used to protect your files from readingby unauthorized persons while in transfer to S3Encryption password: jethvaPath to GPG program: When using secure HTTPS protocol all communication with Amazon S3servers is protected from 3rd party eavesdropping. This method isslower than plain HTTP, and can only be proxied with Python 2.7 or newerUse HTTPS protocol [Yes]: On some networks all internet access must go through a HTTP proxy.Try setting it here if you can't connect to S3 directlyHTTP Proxy server name: New settings: Access Key: AQL6SGGWNDUTTLFW2B6I Secret Key: zy15cA7jTET5cHCFr4BnT72N5MtcXAeo/BpoM77OjNg Default Region: Frankfurt 1 S3 Endpoint: fra1.digitaloceanspaces.com DNS-style bucket+hostname:port template for accessing a bucket: %(bucket)s.fra1.digitaloceanspaces.com Encryption password: jethva Path to GPG program: None Use HTTPS protocol: True HTTP Proxy server name: HTTP Proxy server port: 0 Test access with supplied credentials? [Y/n] YPlease wait, attempting to list all buckets...Success. Your access key and secret key worked fine :-) Now verifying that encryption works...Not configured. Never mind. Save settings? [y/N] yConfiguration saved to '/root/.s3cfg'Once the S3cmd is configured, you can verify your bucket information using the following command:

s3cmd info s3://postgresql-database/You will get the following output:

s3://postgresql-database/ (bucket): Location: fra1 Payer: none Expiration Rule: none Policy: none CORS: none ACL: 6945323: FULL_CONTROLUse S3cmd to Backup PostgreSQL Database to DigitalOcean Spaces

First, log in to your server and connect to the PostgreSQL shell with the following command:

su - postgresqlpsqlOnce you are connected, list all PostgreSQL databases using the following command:

postgres=# \lYou will get all databases in the following output:

List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges-----------+----------+----------+-------------+-------------+----------------------- magentodb | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | studentdb | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres testdb | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | wpdb | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 |(7 rows)Next, use the pg_dumpall tool to backup all PostgreSQL databases:

pg_dumpall -f all_pg_dbs.sqlNext, run the S3cmd command to copy the all_pg_dbs.sql file to the DigitalOcean Spaces:

s3cmd put all_pg_dbs.sql s3://postgresql-database/Output:

upload: 'all_pg_dbs.sql' -> 's3://postgresql-database/all_pg_dbs.sql' [1 of 1] 14793 of 14793 100% in 0s 28.44 KB/s doneYou can now verify all files on the DigitalOcean Spaces using the following command:

s3cmd ls s3://postgresql-databaseYou will get the following output:

2022-02-11 13:42 14793 s3://postgresql-database/all_pg_dbs.sqlYou can also log in to the DigitalOcean Spaces and verify your backup files as shown below:

Scheduled PostgreSQL Single Databases Backups SnapShooter

Backup a single PostgreSQL database to your external storage

Learn more about PostgreSQL Single Databases Backups

Get started for freeThank you for helping us improve!